Kolena aims to redefine quality standards in ML 🎛

Plus: Founder Mohamed on AI testing, evals and multimodal...

Published 29 May 2024 | CV Deep Dive

Today, we’re talking with Mohamed Elgendy, CEO and Co-founder of Kolena.

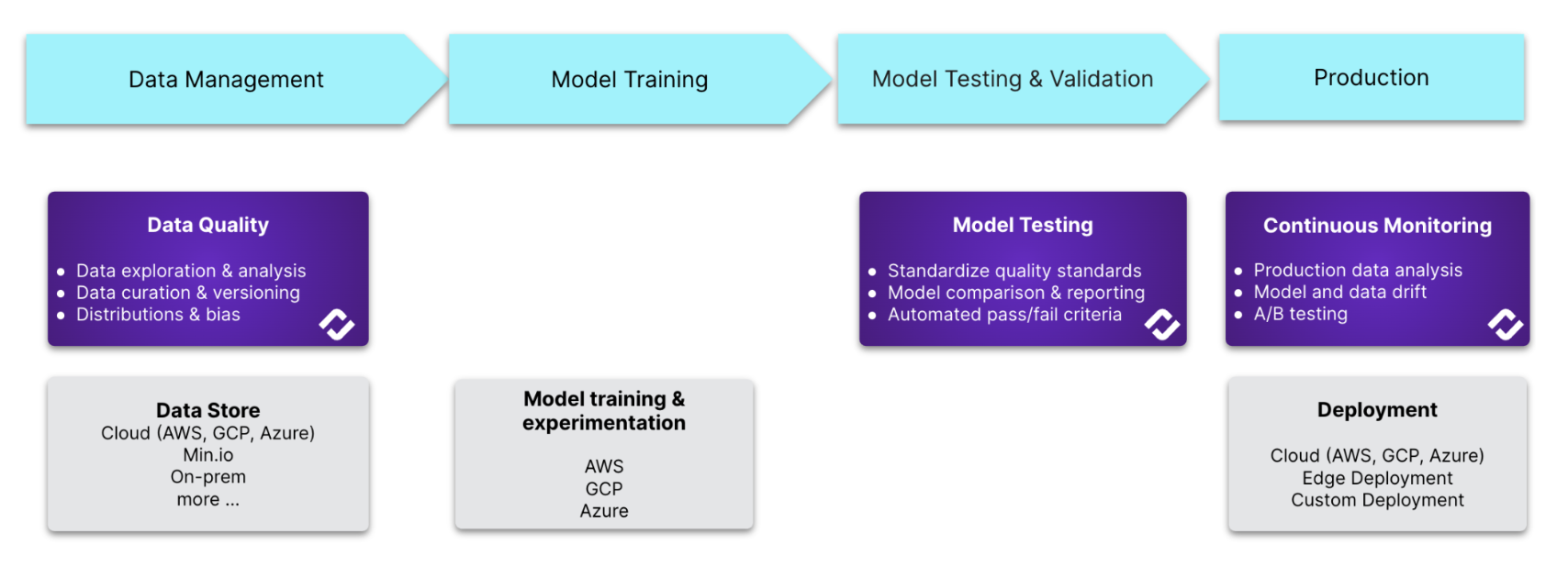

Kolena is a comprehensive ML testing and validation platform offering both granular and end-to-end capabilities. Founded by machine learning engineers passionate about AI quality, Kolena is on a mission to define new quality standards for ML. Mohamed co-founded the company with Andrew Shi (CTO) and Gordon Hart (CPO). Before Kolena, the founding team built AI products and infrastructure at Amazon, Rakuten, and Palantir.

Kolena takes a distinctive approach to testing: instead of focusing on aggregate statistics of model performance, it helps ML engineers isolate granular problem areas to reduce the real-world failure rate of production models. The company also touts its ability to test the entire model pipeline, including pre- and post-processing, as a whole rather than only as individual components.

Today, Kolena is working with companies including John Deere, Rad AI, and Kodiak Robotics on mission-critical use cases where minimizing model failure is paramount. The company raised its $15M Series A last September, led by Lobby Capital with participation from SignalFire, Zero Prime Ventures, Bloomberg Beta, and 11.2 Capital.

In this conversation, Mohamed chats with us about the importance of robust testing for mission-critical AI, how Kolena came to be, and where AI testing is going in the future.

Let’s dive in ⚡️

Read time: 7 mins.

Our Chat with Mohamed 💬

Mohamed - welcome to Cerebral Valley! First off, give us a bit of background on yourself and what led you to co-found Kolena?

Hey there! I’m Mohamed, co-founder and CEO of Kolena.

Prior to Kolena, I managed machine learning teams for about 7 years. I worked on vision and robotics problems at Amazon and Synapse, including building weapon detection systems for the TSA and DoD. I also tackled language problems at Twilio, focusing on natural language understanding, and at Rakuten, I dealt with time series problems, alarm detection, and preventive maintenance.

Machine learning poses non-deterministic challenges, unlike traditional software, where you write assertions against your functions and can be sure they work 100% of the time. In ML, we lack a clear scope of capabilities, which complicates test coverage. For example, while building weapon detection systems for x-ray machines, we might claim 95% accuracy, but what does that mean under different conditions like occlusion, lighting, or weather? Similarly, in language processing, performance can vary by race, gender, and geography, while recommender systems face their own unique challenges. Despite extensive testing, there's always uncertainty: we might spend weeks evaluating models only to remain unsure which one is better.

This highlighted the core problem: the lack of a reliable framework for testing machine learning models. That is what led us to Kolena. While I was writing a book on computer vision, the three co-founders started thinking about a framework to properly test and validate models. This idea evolved into the Model Quality Framework, which brings unit testing to machine learning.

Our unit testing involves breaking data down into scenarios, each testing a specific characteristic. For example, to test for gender bias, we group data points by gender. This approach applies to structured data, images, and text. Capturing scenarios in unit tests eliminates lengthy debugging. Instead of comparing aggregate metrics, we compare specific scenarios, such as model performance for male users from a particular region. This precision lets us push the best model into production and pinpoint exact failures for targeted improvement. It turns the experimental process of improving models into an engineering discipline, making machine learning development reliable and precise.
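To make the scenario idea concrete, here is a minimal, framework-free Python sketch. It is not Kolena's API or the Model Quality Framework itself; the metadata fields (`gender`, `region`), the toy models, and the helper names (`build_scenarios`, `scenario_accuracy`, `compare`) are all hypothetical. It groups labeled samples by metadata and compares two models scenario by scenario instead of on one aggregate number.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# A labeled example: (model input, metadata used to define scenarios, ground-truth label).
Sample = Tuple[dict, dict, int]
ScenarioKey = Tuple[str, ...]


def build_scenarios(samples: List[Sample], keys: Tuple[str, ...]) -> Dict[ScenarioKey, List[Sample]]:
    """Group samples into scenarios keyed by the chosen metadata fields."""
    scenarios: Dict[ScenarioKey, List[Sample]] = defaultdict(list)
    for sample in samples:
        metadata = sample[1]
        scenarios[tuple(metadata[k] for k in keys)].append(sample)
    return dict(scenarios)


def scenario_accuracy(model: Callable[[dict], int],
                      scenarios: Dict[ScenarioKey, List[Sample]]) -> Dict[ScenarioKey, float]:
    """Accuracy of `model` within each scenario instead of over the whole dataset."""
    return {
        key: sum(model(x) == y for x, _, y in group) / len(group)
        for key, group in scenarios.items()
    }


def compare(model_a, model_b, scenarios) -> Dict[ScenarioKey, float]:
    """Per-scenario accuracy change from model_a to model_b (negative = regression)."""
    acc_a = scenario_accuracy(model_a, scenarios)
    acc_b = scenario_accuracy(model_b, scenarios)
    return {key: acc_b[key] - acc_a[key] for key in scenarios}


# Hypothetical toy data and models, for illustration only.
samples: List[Sample] = [
    ({"x": 1}, {"gender": "male", "region": "EU"}, 1),
    ({"x": 0}, {"gender": "male", "region": "EU"}, 0),
    ({"x": 1}, {"gender": "female", "region": "US"}, 1),
    ({"x": 0}, {"gender": "female", "region": "US"}, 1),
]


def model_a(features: dict) -> int:
    return features["x"]


def model_b(features: dict) -> int:
    return 1


scenarios = build_scenarios(samples, ("gender", "region"))
print(compare(model_a, model_b, scenarios))
# {('male', 'EU'): -0.5, ('female', 'US'): 0.5}
```

In this toy example both models are 75% accurate overall, so an aggregate comparison would call them equal, while the per-scenario view shows `model_b` regressing for one group and improving for the other.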

Give us a top-level overview of Kolena – how would you describe it to the uninitiated developer or ML team? And who’s finding the most value in what you’re building?

We define Kolena as a test and validation platform that ensures you are building the right product for the right use cases. We track these use cases starting from the data side: your data has to capture the right use cases, and those use cases are then traced through data quality and model quality tests. We also provide model monitoring to ensure the model's performance aligns with the intended use cases. With Kolena, you monitor model performance against the critical use cases your product is built for.

On the user side, we're heavily focused on what we call ‘mission-critical enterprises’. We're obsessed with these types of use cases. We haven't yet built an offering for smaller teams, such as academic researchers or small innovation units inside enterprises that aren't building a product. Our focus is on mission-critical applications and AI teams building products for specific purposes.

Could you expand a bit on what you mean by mission-critical enterprises? Feel free to share any success stories.

Absolutely. When we say mission-critical, we mean teams for whom model quality is crucial. Quick prototypes or non-critical toy projects don't need rigorous testing, but for mission-critical applications, testing is vital. For example, one of our customers, John Deere, is building autonomous tractors. We also work with Kodiak Robotics and Mercedes on autonomous trucks.

These applications are mission-critical because failures can be life-threatening. Our work spans perception, language, generative AI models, and sensor fusion. Success stories include Rad AI cutting around 70-80% of its model failures in production within the first few months. If a model is 99% accurate, the remaining 1% of production failures is a long tail of edge cases. Cutting 70% of those failures effectively boosts model performance to 99.7%.
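The jump from 99% to 99.7% follows from treating accuracy as one minus the failure rate and removing the stated share of failures. Assuming performance is measured as a simple error rate, with accuracy a, failure rate f = 1 - a, and a fraction r of failures eliminated:

```latex
\begin{aligned}
f' &= f\,(1 - r) = 0.01 \times (1 - 0.70) = 0.003, \\
a' &= 1 - f' = 0.997 \quad (99.7\%).
\end{aligned}
```

At the upper end of the quoted range (r = 0.80), the same calculation gives f' = 0.002 and a' = 99.8%.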

Across our customers, we see two main improvements: model performance, and team productivity through automation. By breaking data down into unit tests, teams can designate critical and important tests, which enables continuous integration (CI) automation. Instead of manually testing each model, they can run many experiments and focus only on the models that don't regress in critical scenarios.

For example, Plus One Robotics, with just one or two machine learning engineers, builds and tests over 20 models a week, thanks to this automation. This scalability and efficiency are what make Kolena a powerful tool for mission-critical AI applications.
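The critical/important split maps naturally onto a CI gate. The Python sketch below is a hypothetical illustration, not Kolena's implementation: the `ScenarioTest` class, the tier names, the tolerances, and the scenario names are all assumptions. It compares a candidate model's per-scenario metrics with the production baseline, blocks the candidate on any critical regression, and only warns on important ones.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ScenarioTest:
    name: str
    priority: str           # hypothetical tiers: "critical" blocks a release, "important" only warns
    tolerance: float = 0.0  # metric drop allowed before the test counts as a regression


def gate(tests: List[ScenarioTest],
         baseline: Dict[str, float],
         candidate: Dict[str, float]) -> Tuple[bool, List[str]]:
    """Compare a candidate model's per-scenario metrics against the current baseline."""
    passed = True
    messages: List[str] = []
    for test in tests:
        drop = baseline[test.name] - candidate[test.name]
        if drop <= test.tolerance:
            continue  # no regression beyond the allowed tolerance
        if test.priority == "critical":
            passed = False
            messages.append(f"FAIL {test.name}: dropped {drop:.3f}")
        else:
            messages.append(f"WARN {test.name}: dropped {drop:.3f}")
    return passed, messages


# Hypothetical scenario names and metric values.
tests = [
    ScenarioTest("pedestrians_at_night", "critical", tolerance=0.005),
    ScenarioTest("heavy_rain", "important", tolerance=0.01),
]
baseline = {"pedestrians_at_night": 0.97, "heavy_rain": 0.93}
candidate = {"pedestrians_at_night": 0.975, "heavy_rain": 0.90}

passed, messages = gate(tests, baseline, candidate)
print(passed, messages)  # True ['WARN heavy_rain: dropped 0.030']
```

In a real CI job, the script would typically exit with a nonzero status when `passed` is false (for example via `sys.exit(1)`), so a regression in a critical scenario fails the build while warnings are surfaced for review.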

The space of ML evals has surged in popularity recently – what is Kolena doing differently from some of the other players in the space?

We have two main differences. First, we focus on granularity, which builds trust by showing test coverage and communicating product capabilities. Second, we focus on testing the entire system, or pipeline, of models. This is crucial because, in AI products, a model is just one part of the system, and there are often many pre-processing and post-processing models involved. Testing each component individually doesn't guarantee the best system performance, so we test each component while also focusing on end-to-end system testing.
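Why component tests alone can miss system failures is easiest to see with a toy example. In the hypothetical three-stage pipeline below (stage names and behavior are invented for illustration, not taken from any Kolena customer), each stage passes its own unit test, yet the composed pipeline fails an end-to-end case because the preprocessing stage and the model disagree about the input scale.

```python
from typing import Any, Callable, List, Sequence, Tuple

# Hypothetical three-stage pipeline; stage names and behavior are illustrative only.


def preprocess(raw: Sequence[int]) -> List[float]:
    """Drop invalid (negative) sensor readings; values stay on the raw 0-255 scale."""
    return [float(v) for v in raw if v >= 0]


def model(features: List[float]) -> float:
    """Mean brightness score, written assuming inputs normalized to [0, 1]."""
    return sum(features) / len(features)


def postprocess(score: float) -> str:
    return "bright" if score > 0.5 else "dark"


def run_pipeline(raw: Any, stages: List[Callable[[Any], Any]]) -> Any:
    """Feed the output of each stage into the next one."""
    out = raw
    for stage in stages:
        out = stage(out)
    return out


def end_to_end_failures(cases: List[Tuple[Any, Any]],
                        stages: List[Callable[[Any], Any]]) -> List[Tuple[Any, Any, Any]]:
    """Return (input, expected, actual) for every case the whole pipeline gets wrong."""
    failures = []
    for raw, expected in cases:
        actual = run_pipeline(raw, stages)
        if actual != expected:
            failures.append((raw, expected, actual))
    return failures


# Each component passes its own isolated unit test...
assert preprocess([10, -1, 20]) == [10.0, 20.0]
assert model([0.25, 0.75]) == 0.5
assert postprocess(0.8) == "bright"

# ...but the composed system misclassifies a mostly dark frame, because
# preprocess never rescales to the [0, 1] range that model expects.
pipeline = [preprocess, model, postprocess]
print(end_to_end_failures([([10, 20, -1], "dark")], pipeline))
# [([10, 20, -1], 'dark', 'bright')]
```

Only the end-to-end check exercises the interface between stages, which is the kind of gap pipeline-level testing is meant to catch.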

It seems like a lot of your current focus is around robotics and autonomous vehicles. Are there other sectors or use cases you’re excited about?

Definitely! I'll share two examples: Rad AI in the generative space and AssemblyAI in the audio space. Rad AI is revolutionizing healthcare by using generative AI to extract insights from radiologists' diagnoses. They started with NLP and evolved into generative AI, seeing similar improvements: cutting 70-80% of model failures and boosting productivity 10-15x within a few months.

AssemblyAI, a leader in audio transcription, showcases our platform's multimodality. Kolena is agnostic to both model type and data type, whether the model is a neural network, a traditional decision tree, or a generative model. We've built a multimodal data engine that handles any data type, breaks it down into test cases, and builds comprehensive test coverage.

From robotics to language to audio, Kolena's versatility is something that really sets us apart.
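A harness that is agnostic to model and data type can be as simple as treating the model as any callable and the metric as a pluggable function. The sketch below is an illustrative assumption, not Kolena's data engine; the stub models (`fake_transcriber`, `fake_classifier`) and their data are hypothetical. It runs the same `evaluate` function over an audio-style transcription case, scored with word error rate (word-level edit distance divided by reference length, a standard transcription metric), and a tabular classification case, scored with 0/1 error.

```python
from typing import Callable, Iterable, List, Tuple, TypeVar

X = TypeVar("X")  # model input: audio clip, image, text, table row, ...
Y = TypeVar("Y")  # model output / ground truth: transcript, label, ...


def evaluate(model: Callable[[X], Y],
             cases: Iterable[Tuple[X, Y]],
             metric: Callable[[Y, Y], float]) -> float:
    """Mean error over a test case; the harness never inspects the data type."""
    scores: List[float] = [metric(model(x), y) for x, y in cases]
    return sum(scores) / len(scores)


def word_error_rate(hypothesis: str, reference: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    hyp, ref = hypothesis.split(), reference.split()
    prev = list(range(len(hyp) + 1))  # distances from an empty reference prefix
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,                           # reference word deleted
                          curr[j - 1] + 1,                       # extra word inserted
                          prev[j - 1] + (ref_word != hyp_word))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def zero_one_error(prediction: int, label: int) -> float:
    return float(prediction != label)


# Hypothetical stand-ins for real models.
def fake_transcriber(clip_id: str) -> str:
    return {"clip_1": "the cat sat on mat"}[clip_id]


def fake_classifier(row: dict) -> int:
    return int(row["temperature"] > 30)


audio_wer = evaluate(fake_transcriber, [("clip_1", "the cat sat on the mat")], word_error_rate)
tabular_error = evaluate(fake_classifier,
                         [({"temperature": 35}, 1), ({"temperature": 20}, 1)],
                         zero_one_error)
print(round(audio_wer, 3), tabular_error)  # 0.167 0.5
```

Keeping the model and metric as plain callables is the design choice that makes the harness modality-agnostic: adding a new data type means supplying a new metric, not changing the evaluation loop.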

You mentioned multi-modality. Give us a rough roadmap for Kolena and how multi-modality plays into that?

We're excited about two main things. First, the multimodality piece will grow exponentially. The way we interface with software is evolving from single input types to integrating various sensors. For example, VR sensors tracking eye movements feed structured data into the system. Multimodality will become the standard for machine learning.

Second, we're focusing on testing AI agents, not just predictive and generative AI. These agents act on information, for example by blocking APIs in security or controlling machinery in military applications, which makes them mission-critical. We believe AI agent evaluation is the next frontier, more so than LLM evaluation. Maybe this is controversial, but I think LLM eval is a short-term play that will be fixed on the model providers' side. Our R&D is geared towards agents and AGI, where we expect to see significant developments next year.

Lastly, tell us a bit about the culture at Kolena - are you hiring, and what do you look for in prospective members joining the team?

We're a team of 42 or 43 people, and we are hiring. There are a few key things we look for in candidates. First, we're obsessed with our customers and the problem we're solving. We start from the problem and emphasize a sense of ownership in implementing solutions.

The other thing I focus on is whether a candidate can get excited about something. For example, what do you do outside of work? It's not just about hobbies; it's about whether a person can get genuinely excited. I look for that in every conversation I have with a candidate. Innovation and hard work come when people are passionate about something. True ownership follows passion. So, if I find someone who can get excited, my job is to put the right incentive and project in front of them to ignite that excitement.

So it comes down to technical abilities, sense of ownership, and the ability to get excited about something.

Conclusion

To stay up to date on the latest with Kolena, follow them on X and learn more about them at Kolena.


If you would like us to ‘Deep Dive’ a founder, team or product launch, please reply to this email (newsletter@cerebralvalley.ai) or DM us on Twitter or LinkedIn.
