Our chat with Groq's Chief Evangelist, Mark Heaps (Pt. 1)

Mark on Groq's founding story, why LPUs > GPUs, and 'that' viral moment...

Published 07 Mar 2024CV Deep Dive

Today, we’re talking to Mark Heaps, Chief Evangelist and SVP of Brand at Groq.

Groq is a startup at the center of the AI-driven chip revolution, and is the brainchild of Jonathan Ross, who was previously a lead inventor of Google’s TPU (Tensor Processing Unit). Founded in 2016, Groq’s stated mission is to ‘set the standard for AI inference speed’ via its proprietary LPU (Language Processing Unit), a new type of end-to-end processing unit system that provides the fastest inference' for computationally intensive applications such as LLMs.

Just two weeks ago, Groq went viral on X for its demos of GroqChat - a consumer-facing application that allows users to query any model via a text input, similar in UI to ChatGPT. Almost overnight, thousands of users began posting snippets of Groq’s blazingly-fast output speeds - seemingly far quicker than anything previously seen via alternative LLMs products. In just a few weeks, Groq’s user numbers have shot up from under 10,000 developers to over 370,000 in a single week.

Today, Groq’s API waitlist numbers in the tens of thousands, and the team is fielding strong demand from Fortune 50s and individual developers alike - all clamoring to incorporate Groq into their own AI applications, as the global race for compute heats up. The startup has received backing from Chamath Palihapitiya’s Social Capital, Tiger Global and D1 Capital, amongst others.

In Part 1 of this conversation, Mark walks us through the founding story of Groq, why LPUs outperform GPUs, and that viral moment.

Let’s dive in ⚡️

Read time: 8 mins

Our Chat with Mark 💬

Mark - welcome to Cerebral Valley! It’s been an incredible few weeks for the Groq team. Firstly, take us through the founding story of Groq, and Jonathan’s background coming from Google.

This really depends on how far back you want to go! Jonathan, our CEO and founder, was the lead inventor of Google’s TPU, which has its own story that culminated in a very notable invention. While at the peak of his career at Google, he grew deeply concerned that AI was going to create a world of ‘haves’ and ‘have nots’’ over the next decade.

Even in 2015, he could tell that there were going to be 5 or 6 corporations in control of all of the major AI systems - including foreign governments - and he felt that everyday developers and innovators were going to be shut out from accessing this transformational technology. As a result, he decided to leave Google and start his own company that would pursue AI for the benefit of all developers.

Groq itself was formed in 2016 after Jonathan’s time at Google. One of my favorite stories is that Groq was actually formed right next to the swimming pool at Jonathan's apartment - the founding team wrote out 3 priorities for the company and started sketching a compiler on a napkin!

From his time working on TPUs, Jonathan understood the key problem with GPUs well: they are well-suited for training AI models, but not at all for real-time AI inference. His insight was that there needed to be a more performant chip for inference in order to serve the thousands of models getting trained on GPUs and deployed into production.

The analogy here was the supply chain - if you’re growing a lot of fruit, somebody eventually has to make wine! He realized that GPUs, including SOTA processors like H100s, have a ceiling when it comes to operations that are under 1-second - otherwise known as ‘real-time AI’. Products like Siri or Alexa, or Waymo, or real-time chat for customer support, are all examples of this, and Jonathan’s original question was “how do we solve the low-latency, real time problem?”

This is what forced the Groq team to invent this generation of our architecture.

Groq has taken a unique approach in that your original innovation was a compiler, which is a software component. What was the thinking behind pursuing software innovation first?

The vision was always clear - to solve the real-time AI problem and get to ultra-low latency performance. The journey there presented all kinds of challenges - how do you do true linear scaling, for example, when you’ve got to invent within the existing architecture? We’ve always taken a first principles approach to each of the core elements of our chip - even our connections from chip to chip are a proprietary invention. All of these radical pieces of innovation, though, have been in service to providing the greatest inference and low latency performance possible.

We actually started by designing the compiler first, which is practically unheard of in chip-making. Usually, it's easier for companies to start with the hardware and then instruct the developers to make the software work on the existing architecture. But, although he designs hardware, Jonathan actually is a software developer - and so he understood the software-first approach, and decided to start with designing the compiler software first.

When Groq invented the compiler, it took three separate teams and multiple rounds of innovation to get it right - after which they asked “how do we design the silicon to map to this?” We’ve spent the next few years answering that - getting chips, navigating the supply chain and ordering from the foundries. People don’t realize our chips are actually made in the US and packaged in Canada, which means we don't have the international supply chain challenges that other folks do - which makes us very attractive to specific types of companies and agencies using AI.

It feels like the world first learned about Groq and LPUs just two weeks ago, despite the company having been working on this problem since 2016. What led to this huge moment of recognition?

This recent virality moment has been magical. We've been building away and sticking to our vision, and we simply needed the right community to take notice. Between 2016 and 2024, we’d been awarded two ISCA papers for our designs, which is practically unheard of for a chip company, and our designs had also been permeating the semiconductor community for a while. This looks like an overnight success, but we’ve been at it for 8 years.

I’d say that a couple of different factors led to this moment. First, we finally reached a point of software maturity where we have over 800 models from HuggingFace compiled in our system. We’ve been aggregating and scraping regularly, and testing each model in our compiler. This process of intense dog-fooding has been key for our software maturation.

The second part was going completely against convention in saying “let's open the floodgates and let the developer world play with it”. Jonathan and I got together, and he suggested that we make the Groq demo the homepage of our website, just to see what would happen. At most, we thought it would be great if we could get a few thousand people playing with it and realizing how fast we really are. We had no idea this would all happen - today, we're at millions of API requests daily, which is unbelievable.

In 2023, we saw an explosion in the field of LLMs and generative AI. How important were the releases of Llama 2 and Mistral, among others, to Groq’s progress over the past 12 months?

Those releases were massive - we owe everything to the open source community, and we shouldn’t ever understate that. When Llama got leaked, one of our engineers, Bill Xing, found it online and said “hey team, does anybody mind if I take some time and see if I can compile this just like we do with HuggingFace models?” Within 48 hours, he had it running on our system, despite the fact that he had to remove a lot of the embedded GPU code in order to make it run effectively!

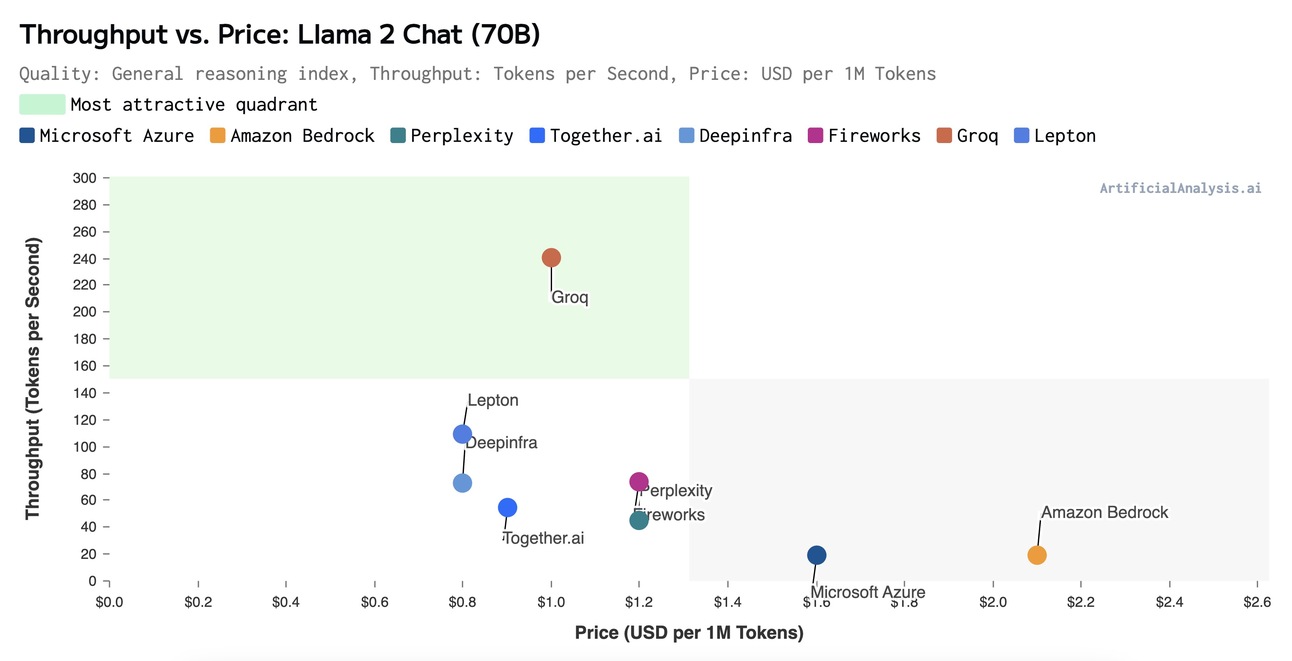

Our compiler was at a different level of maturity then, but we did a quick internal benchmark and realized that without any fitting to the silicon for optimization, we were orders of magnitude faster than what others were saying they were getting out of Llama for inference. Suddenly, we had a moment where, despite the science and the awards validating our speed, an everyday consumer-level opportunity appeared in front of us that showcased how fast LPUs could be. That realization was massive for us.

That said, I don't think the prevalence of those moments from the open-source community would have garnered the attention they did had there also not been the parallel of communities learning from OpenAI and ChatGPT. We had a massive wave of people getting educated about prompting and LLMs, and we found ourselves in a position to say “hey, that's a transformer model and we can make it dramatically faster”. The foundation of our architecture is incredibly well-suited to transformer-based models.

Shortly after that, Llama 2 released, and we showed consistency in that demonstrability. From there, we incorporated Vicuna, then Falcon, and then suddenly Mistral with MoE. Every step of the way, we've been able to insert ourselves alongside the innovation of these communities and these developers as much as we’re able to.

The question on everybody’s mind - what exactly is the difference between a GPU and an LPU?

We look at LPUs as their own category of chip, or as a new processor category altogether.

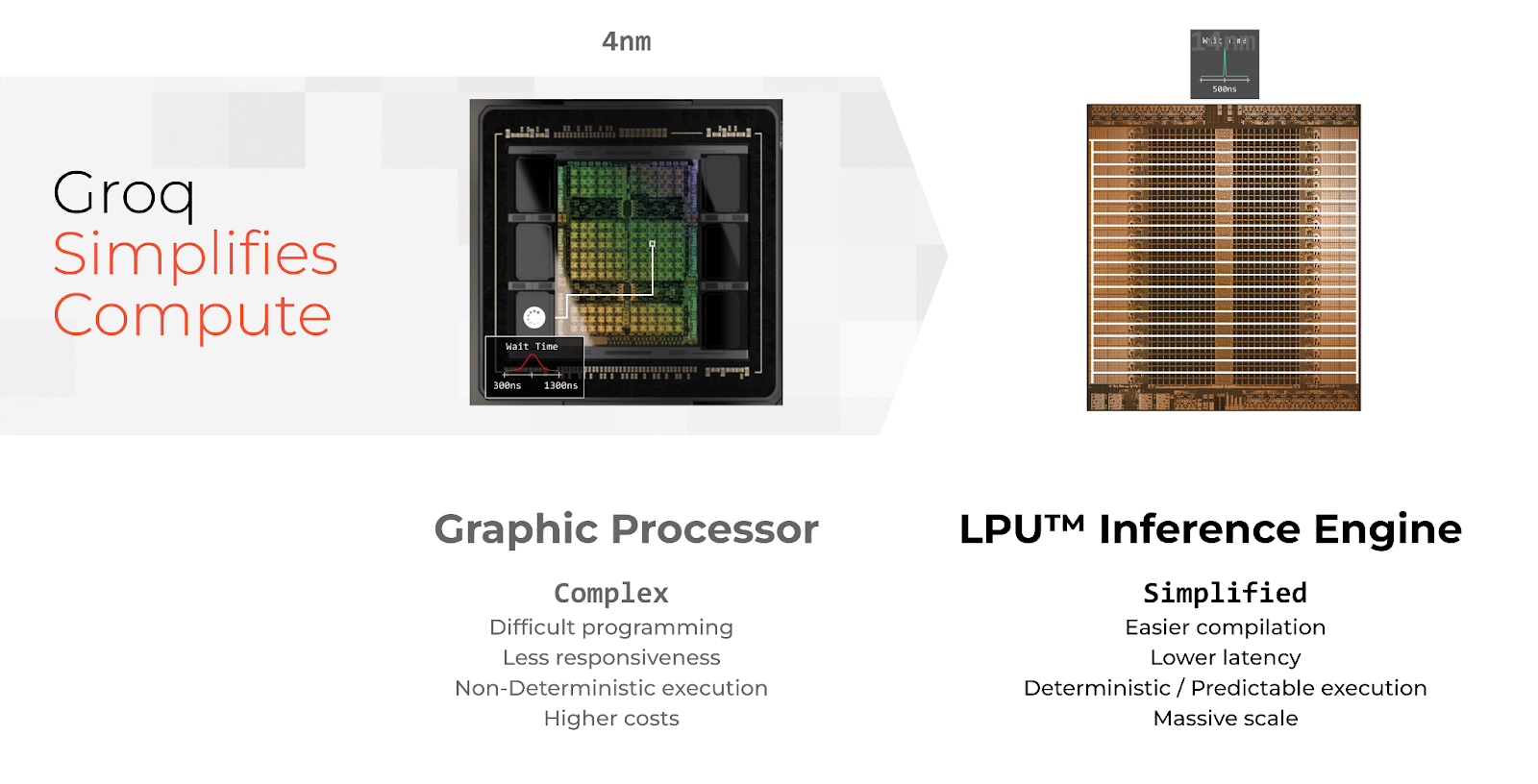

The first key thing to understand is that LPUs are deterministic. GPUs are multicore, which means they use schedulers to send data via different parts of the chip to different cores, and require management between kernels using CUDA. LPUs don’t have any of that - there are no schedulers, so when we compile an application and measure its performance, we know exactly how it will perform when run and deployed. This is key to understanding the application’s efficiency - stripping away the need for scheduling is really where we gain a lot of our performance advantage.

The second difference is that we use SRAM as a key element within our hardware specs, which is really fast for switching information and moving through data with the model, whereas other chip companies might be using HBM and DRAM. The analogy that we like to use is related to commuting: imagine you’re driving in a city like Delhi in India, which is notably jam-packed and complicated - cars sometimes don’t follow traffic lights, and people get places in a much more inefficient way. This is akin to a GPU. And now, imagine you’re driving in Manhattan, which is designed as a grid with no stoplights at all, and everybody knows when to go and when to stop. This is more like an LPU.

So, this is where we gain two advantages - efficiency and scalability. According to NVIDIA’s own publications, every additional eight GPUs or so, you begin to see a decrease in performance and an increase in latency - there’s a point where things nearly flatten out on the curve and latency cannot go higher than that ceiling. LPUs start at that point where GPUs flatten out - which means that GPUs are still better suited for smaller applications. But, once you start getting to data-center scale and beyond, we’re advantaged - because when you take our chips and plug them together, you can scale up to thousands of chips and our system will still perceive it as one chip with many cores. And, because they’re linear, the data flows through them beautifully.

We recently announced that our second chip design will be fabbed by Samsung here in Texas just outside of Austin. It’s a huge leap from 14nm to 4nm. If we didn’t optimize the design at all, we’d still see an additional 3x performance improvement in latency and power usage, which is very powerful. Data flows across our chip in ‘superlanes’ that go east to west across the chip, meaning that we can pipe in all of these different queues on the project - which means it's much more performant with regards to low latency.

This would not be a great chip design if you wanted to use it for video games or video rendering in 3D - but that’s not what it's designed for. This is also why we tell people we don't do training, because training is very well-suited to GPUs with multicores and parallel elements happening out of sequence. But, when you want to generate language - meaning the 100th word after the 99th word - you want to make sure that 99th word is generated first. When you think about audio signals, this is a linear set of data that has to be in order. These are the areas that we're really exceptional at. Think of Language as anything Linear!

Scalability is a major area of focus for any chip company - how are LPUs better suited to handle large inference workloads than, say, a GPU?

For us to be valuable to our users, we ultimately have to start at scale. The way we think about it is: imagine you had to take 800 people to San Francisco from LA. One way to do this would be to have all 800 people ride bikes up individually. Alternatively, you could put that same number of people on a jumbo-jet, and that would be much more efficient. If 10 people want to go to SF, the bike makes more sense from the standpoints of efficiency related to cost, power, usage and deliverability. But, when you need to start with a high number of requests that you want returned in real-time speed, that's when Groq steps in.

The great thing about the LPU’s deterministic design is that we can scale up to thousands of chips, because we've created a proprietary chip-to-chip connection built into the actual chip design. This is what allows us to create data ‘superlanes’ and expand on them. Let's say you had a model and wanted to install it on 512 chips - this is totally doable, and is a massive system compared to a couple of GPUs. But, you’d then want to optimize for your user base or for various applications, which you can do with a single line of code.

In our toolset we have a tool called GroqFlow, where you can explore running your model on 64, 128 or 512 chips. As long as chips are connected, you can piece it together however you want and you still won’t need all of those schedulers. So, what this means for us is that we’re building very large systems to create optionality for developers and for the enterprise. This gives us opportunities to explore provisioning and all sorts of other techniques from a business standpoint.

You’ve had a massive influx of user demand over the past few weeks. Who are you primarily seeing demand from - individual developers or large enterprises?

We’re seeing a ton of demand across the board, from Fortune 50s all the way to individual developers - and the common first question in each of our customer interactions is “how is Groq possibly this fast?!” Once they realize how unique our approach to inference is, customers immediately start getting excited about the real-time AI applications they could build on top of us. An example could be a large enterprise using Groq for responding to customer service calls, where previously the lag between the voice chatbot and the human customer was too large to make such a service work reliably.

We’re also seeing a wide variety of use-cases including AI copilots, financial trading platforms and content generation that uses streaming data. Truly, anything that involves chat or audio with real-time text-to-speech and requires fast inference has been shown to work well with our chips. We’re also seeing a ton of interest in using Groq to answer questions about data from proprietary models - which gives us the opportunity to explore fine-tuning and RAG services…

Developers are in a frenzy trying to get access to the Groq API, especially after demos of GroqChat went viral. How are you thinking about rolling out API access?

We owe a huge shout-out to Matt Schumer, Matt Wolfe and a number of the AI community voices, including yours, that have been very kind to us in bringing attention to Groq. We were getting around ten qualified customer leads per week when we first opened our early access program, but those customers had always given us “wow” responses despite never having heard of us. Suddenly, we’ve shot to hundreds of thousands of API requests per week, and so we’re slowly approving people for API access. This has been one of the toughest challenges that we’ve experienced during this sudden growth. Now we have the Playground on GroqCloud and this is rapidly accelerating our onboarding process.

One interesting question we’re considering internally is: do we take a small slice of those users and give them full, unthrottled access just to see what Groq is capable of handling? Or, do we give access to a much larger audience with the understanding that they would have to be rate-limited due to current capacity - which is what OpenAI did with ChatGPT? We’ve been working internally to find the right requests-per-minute limit to enable developers to do really good work, but also serve a broad range of people. We’ve barely slept in the last 4 days with all the incoming traffic!

That said, we’ve committed a significant amount of our budget to deploying racks across our data centers each week, with the goal of reaching hundreds of racks by the end of this year. The volume of requests has been huge, and we’re committed to serving our community and customers at scale. We’re on the same journey that OpenAI was 18 months ago, so thank you for being patient. We promise you, more API access to Groq is coming!

Conclusion

Stay tuned for Part 2! To stay up to date on the latest with Groq, follow them on X(@GroqInc)and sign up at Groq.com.

Read our past few Deep Dives below:

If you would like us to ‘Deep Dive’ a founder, team or product launch, please reply to this email (newsletter@cerebralvalley.ai) or DM us on Twitter or LinkedIn.

Join Slack | All Events | Jobs | Home